Visa just dropped the mother of all AI payment demos. Visa gets agentic commerce. The card network made its 2025 announcements this morning, and the implications are significant. I was surprised to see pretty much everything on my wishlist, and more.

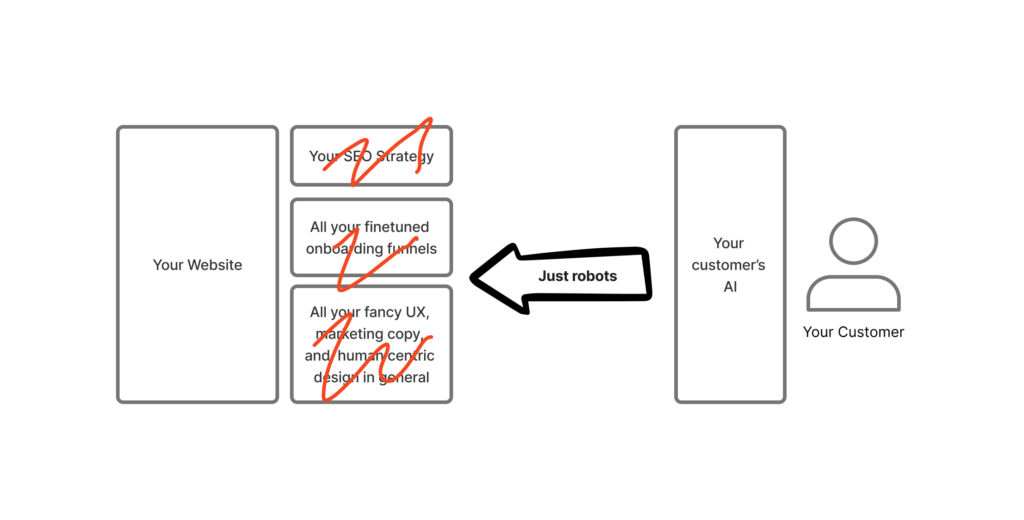

Here’s the thing. AI services are already disrupting how people discover, evaluate, and choose what they buy, particularly for high-consideration, high-intent purchases. And this trend is only going to accelerate as agentic capabilities get stronger. But you can’t buy something through ChatGPT, and you can’t (and shouldn’t!) just hand ChatGPT your raw credit card info and hope for the best.

For better or worse, AI flows are non-deterministic and can make mistakes, and there are real security and data privacy concerns when commingling LLM plugins (aka MCP servers) from different sources into one agentic workflow. The common example is researching and booking a travel itinerary.

The solution is to give the AI a tightly controlled payment credential, not a blank check.

- Start with a trusted AI provider, then provision that provider with a tokenized card number in lieu of your real one. Visa demonstrated this step with a consumer tapping a card to their phone, just like adding a card to an Apple Pay wallet.

- Once provisioned, the card only activates through express consumer approval, which enables that token for a limited time, merchant scope, payment limit, etc.

- Then the payment network itself enforces that any transaction on that token matches the purchase intent the consumer expressly approved for that session.
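To make the three steps concrete, here is a minimal Python sketch of how a scoped token check could work. Everything in it is an assumption for illustration: `PurchaseIntent`, `Transaction`, `TokenVault`, the field names, and the single-use behavior are hypothetical and are not Visa's actual API or data model.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class PurchaseIntent:
    """Limits the consumer approved for one session (hypothetical fields)."""
    merchant_id: str
    max_amount_cents: int
    currency: str
    expires_at: datetime


@dataclass(frozen=True)
class Transaction:
    """A charge attempted by the AI agent against a token (hypothetical)."""
    token: str
    merchant_id: str
    amount_cents: int
    currency: str
    timestamp: datetime


class TokenVault:
    """Toy stand-in for the network-side enforcement; not a real Visa API."""

    def __init__(self) -> None:
        # token -> the intent currently approved for it
        self._intents: dict[str, PurchaseIntent] = {}

    def approve(self, token: str, intent: PurchaseIntent) -> None:
        """Step 2: the consumer activates the token for one scoped intent."""
        self._intents[token] = intent

    def authorize(self, tx: Transaction) -> tuple[bool, str]:
        """Step 3: decline anything that does not match the approved intent."""
        intent = self._intents.get(tx.token)
        if intent is None:
            return False, "token not activated"
        if tx.timestamp >= intent.expires_at:
            return False, "approval expired"
        if tx.merchant_id != intent.merchant_id:
            return False, "merchant out of scope"
        if tx.currency != intent.currency:
            return False, "currency mismatch"
        if tx.amount_cents > intent.max_amount_cents:
            return False, "amount over limit"
        # Assumption: an approval is single-use, so consume it on success.
        del self._intents[tx.token]
        return True, "approved"


# Usage: approve a flight purchase up to $500.00 for the next 15 minutes.
now = datetime.now(timezone.utc)
vault = TokenVault()
vault.approve(
    "tok_demo",
    PurchaseIntent("airline-123", 50_000, "USD", now + timedelta(minutes=15)),
)
print(vault.authorize(Transaction("tok_demo", "hotel-999", 10_000, "USD", now)))
print(vault.authorize(Transaction("tok_demo", "airline-123", 42_000, "USD", now)))
```

The point of the sketch is that the check lives on the network side, not in the agent: even if the AI makes a mistake or a plugin misbehaves, a charge outside the approved merchant, amount, or time window is simply declined.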

Honestly, I was not expecting the card networks to be this forward-thinking yet. It feels like somebody cooked here. Maybe partnerships with OpenAI, Anthropic, Perplexity, etc. will do that 🙂